| Firebird Documentation Index | Firebird Home |

|

Table of Contents

List of Figures

List of Tables

Table of Contents

The v.2.0 release cycle of Firebird brought a large collection of long-awaited enhancements under the hood that significantly improved performance, security and support for international languages. Several annoying limitations, along with a huge pile of old bugs inherited from the legacy code, have gone. Many of the command-line tools have been refurbished and this release introduces the all-new incremental backup tools NBak and NBackup.

The old “252 bytes or less” limit on index size is gone for good, replaced by much-extended limits that depend on page size. Calculation of index statistics has been revamped to improve the choices the optimizer has available, especially for complex outer join distributions and DISTINCT queries.

Many new additions have been made to the SQL language, including support for derived tables (SELECT ... FROM ( SELECT ... FROM), PSQL blocks in dynamic SQL through the new EXECUTE BLOCK syntax and some handy new extensions in PSQL itself.

This sub-release does not add any new functionality to the database engine. Several important bug-fixes that have turned up during development of versions 2.1.x and 2.5 have been backported.

Of special note are the fixes for the gfix validation and shutdown issues described below in the Known Issues for V.2.0.5. The Tracker ticket numbers are CORE-2271 and CORE-2846, respectively.

Note also few backported improvements that are present in this release:

The firebird.conf ConnectionTimeout can now be applied to XNET connections, to help with a specific slow connection problem on some Windows installations.

A backported optimizer improvement could help to speed up some complicated queries involving cross joins.

A backported nBackup improvement for POSIX platforms could help alleviate a reported problem of resource-gobbling during full backup.

This sub-release does not add any new functionality to the database engine. Several important bug-fixes that have turned up during development of versions 2.1.x and 2.5 have been backported.

A long-standing, legacy loophole in the handling of DPB parameters enabled ordinary users to make connection settings that could lead to database corruptions or give them access to SYSDBA-only operations. The loophole has been closed, a change that could affect several existing applications, database tools and connectivity layers (drivers, components). Details are in Chapter 3, Changes to the Firebird API and ODS.

It has been discovered that the gfix utility has a legacy bug (CORE-2271) that exhibits itself during the database validation/repair routines on large databases. The bug has been fixed in version 2.1.2 and affects all preceding versions of Firebird, including this sub-release. The privilege level of the user running these routines is checked too late in the operation, thus allowing a non-privileged user (i.e., not SYSDBA or Owner) to start a validation operation. Once the privilege check occurs, the database validation can be halted in mid-operation and thus be left unfinished, resulting in logical corruption that might not have been there otherwise.

It appears likely that this trouble occurs only with quite large databases: on small ones, the changes performed may complete before the privilege check.

Documentation has always stipulated that the SYSDBA or Owner must perform operations that do database-level changes. The gfix code was always meant to enforce this rule. If you have discovered this loophole yourself and have regarded it as “an undocumented feature” that allowed ordinary users to do validation and repair, then you are on notice. It is a bug and has been corrected in versions 2.1.2 and 2.5. It will be corrected in versions 2.0.6 and 1.5.6.

A regression issue surfaced with the implementation of the new gfix shutdown modes when shutdown is called with the -attach or -tran options. If connections are still alive when the specified timeout expires, the engine returns a message indicating that the shutdown was unsuccessful. However, instead of leaving the database in the online state, as it should, it puts the database into some uncertain “off-line” state and further connections are refused.

It affects all versions of Firebird up to and including v.2.0.5 and v.2.1.3, and all v.2.5 alphas, betas and release candidates. See Tracker ticket CORE-2846.

This sub-release does not add any new functionality to the database engine. Several important bugs have been fixed, including a number of unregistered nbackup bugs that were found to cause database corruptions under high-load conditions.

During Firebird 2.1 development it was discovered that Forced Writes had never worked on Linux, in either the InterBase or the Firebird era. That was fixed in V.2.1 and backported to this sub-release.

The issue with events over WNet protocol reported below for v.2.0.3 has been fixed. The full list of bugs fixed in V.2.0.4 is in the bugfixes chapter and also in the separate bug fixes document associated with V.2.1, which you can download from the Documentation Index at the Firebird website.

This sub-release does not add any new functionality to the database engine but fixes two significant bugs, one of which caused the v.2.0.2 sub-release to be recalled a week after it was released.

To all intents and purposes, therefore, this is the sub-release following sub-release 2.0.1. However, in the interim, the port of Firebird 2.0.3 to Solaris 2.10 (Solaris 10) has been completed for both Intel and SPARC platforms.

Please be sure to uninstall Firebird 2.0.2. It should not be necessary to revert databases to pre-2.0.2 state but, if you used EXECUTE STATEMENT to operate on varchars, varchar data written from results might have suffered truncation.

A regression appeared after v.2.0.1, whereby events cannot work across the WNet protocol. A call to isc_que_events() will cause the server to crash. (Tracker ID CORE-1460).

This sub-release does not add any new functionality to the database engine. It contains a number of fixes to bugs discovered since the v.2.0.1 sub-release.

Some minor improvements were made:

A port of Firebird 2.0.2 Classic for MacOSX on Intel was completed by Paul Beach and released.

In response to a situation reported in the Tracker as CORE-1148, whereby the Services API gave ordinary users access to the firebird.log, Alex Peshkoff made the log accessible only if the logged-in user is SYSDBA.

This sub-release does not add any new functionality to the database engine. It contains a number of fixes to bugs discovered since the release.

Minor improvements:

Gentoo or FreeBSD are now detected during configuration (Ref.: Tracker CORE-1047). Contributions by Alex Peshkoff and D. Petten.

It was discovered that the background garbage collector was unnecessarily reading back versions of active records (Ref.: Tracker CORE-1071). That was removed by Vlad Horsun.

Since Firebird 1.5.3, neither the relation name nor the alias was being returned for columns participating in a GROUP BY aggregation with joins. It has been fixed, particularly to assist the IB Object data access layer to properly support its column search features on output sets.

Bugfix (CORE-1133) "The XNET (IPC) communication protocol would not work across session boundaries" affects those attempting to access databases using the local protocol on Windows Vista as well as those using remote terminal services locally on XP or Server 2003. This fix, done in v.2.0.1, should remove the problems encountered under these conditions.

An important reversion to 1.5 behaviour has occurred in sub-release 2.0.1, as follows:

In Firebird 2.0, a deliberate restriction was imposed to prevent anyone from dropping, altering or recreating a PSQL module if it had been used since the database was opened. An attempt to prepare the DDL statement would result in an “Object in Use” exception.

Many people complained that the restriction was unacceptable because they depended on performing these metadata changes “on the fly”. The restriction has therefore been removed. However, the reversion in no way implies that performing DDL on active PSQL modules is “safer” in Firebird 2.0.1 and higher than it was in V.1.5.

If you are upgrading from V.2.0 or V.2.0.1 to V.2.0.2, please study the sections summarising the latest V.2.0.2.

If you are moving to Firebird 2.0.2 directly from Firebird 1.5.4 or lower versions, please take a moment to read on here and note some points about approaching this new release.

The on-disk structure (ODS) of the databases created under Firebird has changed. Although Firebird 2.0 will connect to databases having older ODS versions, most of the new features will not be available to them.

Make transportable gbak backups of your existing databases--including your old security.fdb or (even older) isc4.gdb security databases--before you uninstall the older Firebird server and set out to install Firebird 2.0. Before you proceed, restore these backups in a temporary location, using the old gbak, and verify that the backups are good.

Naturally, with so much bug-removal and closing of holes, there are sure to be things that worked before and now no longer work. A collection of Known Compatibility Issues is provided to assist you to work out what needs to be done in your existing system to make it compatible with Firebird 2.0.

Give special attention to the changes required in the area of user authentication.

In a couple of areas, planned implementations could not be completed for the v.2.0 release and will be deferred to later sub-releases:

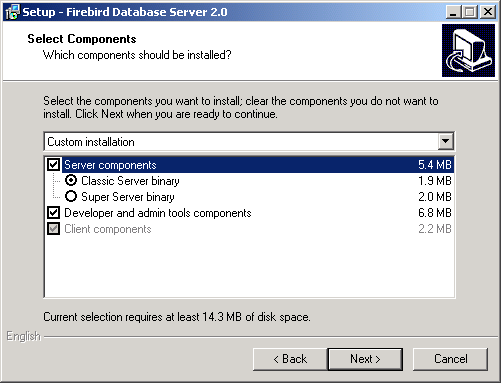

64-bit builds for both Superserver and Classic are ready and available for installing on Linux. Note that the 64-bit ports have been done and tested for AMD64 only. These builds should also work on Intel EM64T. The Intel IA-64 platform is not supported in this release. A FreeBSD port of the 64-bit builds has also been done.

Win64 hosts are running without problems and the MS VC8 final release seems to work satisfactorily, so we are able to say we are no longer hampered by problems with the Microsoft compiler. The Win64 port is complete and into testing, but is still considered experimental. It will become publicly available in a subsequent v.2.x release.

Although the capability to run multiple Firebird servers simultaneously on a single host has been present since Firebird 1.5, we still do not provide the ability to set them up through our installer programs and scripts.

During Firebird 2 development, a capability to create and access databases on raw devices on POSIX systems was enabled to assist an obscure platform port. To date it is undocumented, has not been subjected to rigorous QA or field testing and is known to present problems for calculating disk usage statistics. A Readme text will be made available in the CVS tree for those who wish to give it a try and would like to make a case for its becoming a feature in a future release.

If you think you have discovered a bug in this release, please make a point of reading the instructions for bug reporting in the article How to Report Bugs Effectively, at the Firebird Project website.

Follow these guidelines as you attempt to analyse your bug:

Write detailed bug reports, supplying the exact server model and build number of your Firebird kit. Also provide details of the OS platform. Include reproducible test data in your report and post it to our Tracker.

If you want to start a discussion thread about a bug or an implementation, do so by subscribing to the firebird-devel list and posting the best possible bug description you can.

Firebird-devel is not for discussing bugs in your software! If you are a novice with Firebird and need help with any issue, you can subscribe to the firebird-support list and email your questions to firebird-support@yahoogroups. com.

You can subscribe to this and numerous other Firebird-related support forums from the Lists and Newsgroups page at the Firebird website.

A full, integrated manual for Firebird 2.0 and preceding releases is well on the way, but it's not quite with us yet. Meanwhile, there is plenty of documentation around for Firebird if you know where to look. Study the Novices Guide and Knowledgebase pages at the Firebird website for links to papers and other documents to read on-line and/or download.

Don't overlook the materials in the /bin directory of your Firebird installation. In particular, make use of the Firebird 2.0 Quick Start Guide to help you get started.

The Firebird Project has an integral user documentation project, a team of volunteers who are writing, editing and adapting user manuals, white papers and HowTos. At the time of this release, the hard-working coordinator of the Docs project is Paul Vinkenoog.

An index of available documents can be found in the on-line documentation index. Published docs currently include the essential Quick Start Guides for Firebird versions 1.5 and 2.0 in English and several other languages.

For the official documentation we use a Docbook XML format for sources and build PDF and HTML output using a suite of Java utilities customised for our purposes. These notes were developed and built under this system.

Paul Vinkenoog has written comprehensive, easy-to-follow manuals for writing Firebird documentation and for using our tools. You can find links to these manuals in the aforementioned index. New team members who want to do some writing or translating are always more than welcome. For information about the team's activities and progress you can visit the Docs Project's homepage. We have a lab forum for documenters and translators, firebird-docs, which you can join by visiting the Lists and Newsgroups page at the Firebird web site.

These release notes are your main documentation for Firebird 2. However, if you are unfamiliar with previous Firebird versions, you will also need the release notes for Firebird 1.5.3. For convenience, copies of both sets of release notes are included in the binary kits. They will be installed in the /doc directory beneath the Firebird root directory. Several other useful README documents are also installed there.

For future reference, if you ever need to get a copy of the latest release notes before beginning installation, you can download them from the Firebird web site. The link can be found on the same page that linked you to the binary kits, towards the bottom of the page.

If you do not own a copy of The Firebird Book, by Helen Borrie, then you will also need to find the beta documentation for InterBase® 6.0. It consists of several volumes in PDF format, of which the most useful will be the Language Reference (LangRef.pdf) and the Data Definition Guide (DataDef.pdf). The Firebird Project is not allowed to distribute these documents but they are easily found at several download sites on the Web using Google and the search key "LangRef.pdf". When you find one, you usually find them all!

--The Firebird Project

Table of Contents

Implemented support for derived tables in DSQL (subqueries in FROM clause) as defined by SQL200X. A derived table is a set, derived from a dynamic SELECT statement. Derived tables can be nested, if required, to build complex queries and they can be involved in joins as though they were normal tables or views.

More details under Derived Tables in the DML chapter.

Multiple named (i.e. explicit) cursors are now supported in PSQL and in DSQL EXECUTE BLOCK statements. More information in the chapter Explicit Cursors.

Two significant changes have been made to the Windows-only protocols.-

Firebird 2.0 has replaced the former implementation of the local transport protocol (often referred to as IPC or IPServer) with a new one, named XNET.

It serves exactly the same goal, to provide an efficient way to connect to server located on the same machine as the connecting client without a remote node name in the connection string. The new implementation is different and addresses the known issues with the old protocol.

Like the old IPServer implementation, the XNET implementation uses shared memory for inter-process communication. However, XNET eliminates the use of window messages to deliver attachment requests and it also implements a different synchronization logic.

Besides providing a more robust protocol for local clients, the XNET protocol brings some notable benefits:

it works with Classic Server

it works for non-interactive services and terminal sessions

it eliminates lockups when a number of simultaneous connections are attempted

The XNET implementation should be similar to the old IPServer implementation, although XNET is expected to be slightly faster.

The one disadvantage is that the XNET and IPServer implementations are not compatible with each other. This makes it essential that your fbclient.dll version should match the version of the server binaries you are using (fbserver.exe or fb_inet_server.exe) exactly. It will not be possible to to establish a local connection if this detail is overlooked. (A TCP localhost loopback connection via an ill-matched client will still do the trick, of course).

WNET (a.k.a. NetBEUI) protocol no longer performs client impersonation.

In all previous Firebird versions, remote requests via WNET are performed in the context of the client security token. Since the server serves every connection according to its client security credentials, this means that, if the client machine is running some OS user from an NT domain, that user should have appropriate permissions to access the physical database file, UDF libraries, etc., on the server filesystem. This situation is contrary to what is generally regarded as proper for a client-server setup with a protected database.

Such impersonation has been removed in Firebird 2.0. WNET connections are now truly client-server and behave the same way as TCP ones, i.e., with no presumptions with regard to the rights of OS users.

Since Firebird 1.0 and earlier, the Superserver engine has performed background garbage collection, maintaining information about each new record version produced by an UPDATE or DELETE statement. As soon as the old versions are no longer “interesting”, i.e. when they become older than the Oldest Snapshot transaction (seen in the gstat -header output) the engine signals for them to be removed by the garbage collector.

Background GC eliminates the need to re-read the pages containing these versions via

a SELECT COUNT(*) FROM aTable or other table-scanning query from a user, as occurs in Classic

and in versions of InterBase prior to v.6.0. This earlier GC mechanism is known as cooperative garbage

collection.

Background GC also averts the possibility that those pages will be missed because they are seldom read. (A sweep, of course, would find those unused record versions and clear them, but the next sweep is not necessarily going to happen soon.) A further benefit is the reduction in I/O, because of the higher probability that subsequently requested pages still reside in the buffer cache.

Between the point where the engine notifies the garbage collector about a page containing unused versions and the point when the garbage collector gets around to reading that page, a new transaction could update a record on it. The garbage collector cannot clean up this record if this later transaction number is higher than the Oldest Snapshot or is still active. The engine again notifies the garbage collector about this page number, overriding the earlier notification about it and the garbage will be cleaned at some later time.

In Firebird 2.0 Superserver, both cooperative and background garbage collection are now possible. To manage it, the new configuration parameter GCPolicy was introduced. It can be set to:

cooperative - garbage collection will be performed only in cooperative mode (like Classic) and the engine will not track old record versions. This reverts GC behaviour to that of IB 5.6 and earlier. It is the only option for Classic.

background - garbage collection will be performed only by background threads, as is the case for Firebird 1.5 and earlier. User table-scan requests will not remove unused record versions but will cause the GC thread to be notified about any page where an unused record version is detected. The engine will also remember those page numbers where UPDATE and DELETE statements created back versions.

combined (the installation default for Superserver) - both background and cooperative garbage collection are performed.

The Classic server ignores this parameter and always works in “cooperative” mode.

Porting of the Services API to Classic architecture is now complete. All Services API functions are now available on both Linux and Windows Classic servers, with no limitations. Known issues with gsec error reporting in previous versions of Firebird are eliminated.

All Firebird versions provide two transaction wait modes: NO WAIT and WAIT. NO WAIT mode means that lock conflicts and deadlocks are reported immediately, while WAIT performs a blocking wait which times out only when the conflicting concurrent transaction ends by being committed or rolled back.

The new feature extends the WAIT mode by making provision to set a finite time interval to wait for the concurrent transactions. If the timeout has passed, an error (isc_lock_timeout) is reported.

Timeout intervals are specified per transaction, using the new TPB constant isc_tpb_lock_timeout in the API or, in DSQL, the LOCK TIMEOUT <value> clause of the SET TRANSACTION statement.

The operators now work correctly with BLOBs of any size. Issues with only the first segment being searched and with searches missing matches that straddle segment boundaries are now gone.

Pattern matching now uses a single-pass Knuth-Morris-Pratt algorithm, improving performance when complex patterns are used.

The engine no longer crashes when NULL is used as ESCAPE character for LIKE

A reworking has been done to resolve problems with views that are implicitly updatable, but still have update triggers. This is an important change that will affect systems written to take advantage of the undocumented [mis]behaviour in previous versions.

For details, see the notes in DDL Migration Issues in the Compatibility chapter of these notes.

Single-user and full shutdown modes are implemented using new [state] parameters for

the gfix -shut and gfix -online commands.

Syntax Pattern

gfix <command> [<state>] [<options>]

<command>> ::= {-shut | -online}

<state> ::= {normal | multi | single | full}

<options> ::= {[-force | -tran | -attach] <timeout>}

normal state = online database

multi state = multi-user shutdown mode (the legacy one, unlimited attachments of SYSDBA/owner are allowed)

single state = single-user shutdown (only one attachment is allowed, used by the restore process)

full state = full/exclusive shutdown (no attachments are allowed)

For more details, refer to the section on Gfix New Shutdown Modes, in the Utilities chapter. A regression surfaced affecting usage of these new shutdown modes, which is described in an alert in that topic.

For a list of shutdown state flag symbols and an example of usage, see Shutdown State in the API.

Ability to signal SQL NULL via a NULL pointer (see Signal SQL NULL in UDFs).

External function library ib_udf upgraded to allow the string functions ASCII_CHAR, LOWER, LPAD, LTRIM, RPAD, RTIM, SUBSTR and SUBSTRLEN to return NULL and have it interpreted correctly.

The script ib_udf_upgrade.sql can be applied to pre-v.2 databases that have these

functions declared, to upgrade them to work with the upgraded library. This script should be used only when

you are using the new ib_udf library with Firebird v2 and operation requests are modified to anticipate

nulls.

Compile-time checking for concatenation overflow has been replaced by run-time checking.

From Firebird 1.0 onward, concatenation operations have been checked for the possibility that the resulting string might exceed the string length limit of 32,000 bytes, i.e. overflow. This check was performed during the statement prepare, using the declared operand sizes and would throw an error for an expressions such as:

CAST('qwe' AS VARCHAR(30000)) || CAST('rty' AS VARCHAR(30000))

From Firebird 2.0 onward, this expression throws only a warning at prepare time and the overflow check is repeated at runtime, using the sizes of the actual operands. The result is that our example will be executed without errors being thrown. The isc_concat_overflow exception is now thrown only for actual overflows, thus bringing the behaviour of overflow detection for concatenation into line with that for arithmetic operations.

Lock contention in the lock manager and in the SuperServer thread pool manager has been reduced significantly

A rare race condition was detected and fixed, that could cause Superserver to hang during request processing until the arrival of the next request

Lock manager memory dumps have been made more informative and OWN_hung is detected correctly

Decoupling of lock manager synchronization objects for different engine instances was implemented

40-bit (64-bit internally) record enumerators have been introduced to overcome the ~30GB table size limit imposed by 32-bit record enumeration.

BUGCHECK log messages now include file name and line number. (A. Brinkman)

Routines that print out various internal structures (DSQL node tree, BLR, DYN, etc) have been updated. (N. Samofatov)

Thread-safe and signal-safe debug logging facilities have been implemented. (N. Samofatov)

Posix SS builds now handle SIGTERM and SIGINT to shutdown all connections gracefully. (A. Peshkov)

Invariant tracking in PSQL and request cloning logic were reworked to fix a number of issues with recursive procedures, for example SF bug #627057.

Invariant tracking is the process performed by the BLR compiler and the optimizer to decide whether an "invariant" (an expression, which might be a nested subquery) is independent from the parent context. It is used to perform one-time evaluations of such expressions and then cache the result.

If some invariant is not determined, we lose in performance. If some variant is wrongly treated as invariant, we see wrong results.

Example

select * from rdb$relations

where rdb$relation_id <

( select rdb$relation_id from rdb$database )

This query performs only one fetch from rdb$database instead of evaluating the subquery for every row of rdb$relations.

Firebird 2.0 adds an optional RETAIN clause to the DSQL ROLLBACK

statement to make it consistent with COMMIT [RETAIN].

See ROLLBACK RETAIN Syntax in the chapter about DML.

The root directory lookup path has changed so that server processes on Windows no longer use the Registry.

The command-line utilities still check the Registry.

Better cost-based calculation has been included in the optimizer routines.

The new On-Disk Structure (ODS) is ODS11.

For more information, see the chapter ODS Changes.

Table of Contents

Some other needed changes have been performed in the Firebird API. They include.-

From v.2.0.5 and v2.1.2 onward

Several DPB parameters have been

made inaccessible to ordinary users, closing some dangerous loopholes. In some cases, they are settings

that would alter the database header settings and potentially cause corruptions if not performed under

administrator control; in others, they initiate operations that are otherwise restricted to the SYSDBA.

They are.-

isc_dpb_shutdown and isc_dpb_online

isc_dpb_gbak_attach, isc_dpb_gfix_attach and isc_dpb_gstat_attach

isc_dpb_verify

isc_dpb_no_db_triggers

isc_dpb_set_db_sql_dialect

isc_dpb_sweep_interval

isc_dpb_force_write

isc_dpb_no_reserve

isc_dpb_set_db_readonly

isc_dpb_set_page_buffers (on Superserver)

The parameter isc_dpb_set_page_buffers can still be used by ordinary users on Classic and it will set the buffer size temporarily for that user and that session

only. When used by the SYSDBA on either Superserver or Classic, it will change the buffer count in the database header, i.e.,

make a permanent change to the default buffer size.

This change will affect any of the listed DPB parameters that have been explicitly set, either by including them in the DPB implementation by default property values or by enabling them in tools and applications that access databases as ordinary users. For example, a Delphi application that included 'RESERVE PAGE SPACE=TRUE' and 'FORCED WRITES=TRUE' in its database Params property, which caused no problems when the application connected to Firebird 1.x, 2.0.x or 2.1.0/2.1.1, now rejects a connection by a non-SYSDBA user with ISC ERROR CODE 335544788, “Unable to perform operation. You must be either SYSDBA or owner of the database.”

The API header file, ibase.h has been subjected to a cleanup. with the result that public headers no longer contain private declarations.

The new feature extends the WAIT mode by making provision to set a finite time interval to wait for the concurrent transactions. If the timeout has passed, an error (isc_lock_timeout) is reported.

Timeout intervals can now be specified per transaction, using the new TPB constant isc_tpb_lock_timeout in the API.

The DSQL equivalent is implemented via the LOCK TIMEOUT <value> clause of the SET TRANSACTION statement.

The function call isc_dsql_sql_info() has been extended to enable relation aliases to be retrieved, if required.

isc_blob_lookup_desc() now also describes blobs that are outputs of stored procedures

The macro definition FB_API_VER is added to ibase.h to indicate the current API

version. The number corresponds to the appropriate Firebird version.

The current value of FB_API_VER is 20 (two-digit equivalent of 2.0). This macro can be used by client applications to check the version of ibase.h its being compiled with.

The following items have been added to the isc_database_info() function call structure:

The following items have been added to the isc_transaction_info() function call structure:

Returns the number of the oldest [interesting] transaction when the current transaction started. For snapshot transactions, this is also the number of the oldest transaction in the private copy of the transaction inventory page (TIP).

For a read-committed transaction, returns the number of the current transaction.

For all other transactions, returns the number of the oldest active transaction when the current transaction started.

Returns the number of the lowest tra_oldest_active of all

transactions that were active when the current transaction started.

This value is used as the threshold ("high-water mark") for garbage collection.

Returns the isolation level of the current transaction. The format of the returned clumplets is:

isc_info_tra_isolation,

1, isc_info_tra_consistency | isc_info_tra_concurrency |

2, isc_info_tra_read_committed,

isc_info_tra_no_rec_version | isc_info_tra_rec_version

That is, for Read Committed transactions, two items are returned (isolation level and record versioning policy) while, for other transactions, one item is returned (isolation level).

Returns the access mode (read-only or read-write) of the current transaction. The format of the returned clumplets is:

isc_info_tra_access, 1, isc_info_tra_readonly | isc_info_tra_readwrite

The following improvements have been added to the Services API:

Services are now executed as threads rather than processes on some threadable CS builds (currently 32- bit Windows and Solaris).

The new function fb_interpret() replaces the former

isc_interprete() for extracting the text for a Firebird error message from the error status vector to a

client buffer.

isc_interprete() is vulnerable to overruns and is deprecated as unsafe. The new function should be used instead.

API Access to database shutdown is through flags appended to the isc_dpb_shutdown parameter in the DBP argument passed to isc_attach_database(). The symbols for the <state> flags are:

#define isc_dpb_shut_cache 0x1

#define isc_dpb_shut_attachment 0x2

#define isc_dpb_shut_transaction 0x4

#define isc_dpb_shut_force 0x8

#define isc_dpb_shut_mode_mask 0x70

#define isc_dpb_shut_default 0x0

#define isc_dpb_shut_normal 0x10

#define isc_dpb_shut_multi 0x20

#define isc_dpb_shut_single 0x30

#define isc_dpb_shut_full 0x40

Example of Use in C/C++

char dpb_buffer[256], *dpb, *p; ISC_STATUS status_vector[ISC_STATUS_LENGTH]; isc_db_handle handle = NULL; dpb = dpb_buffer; *dpb++ = isc_dpb_version1; const char* user_name = “SYSDBA”; const int user_name_length = strlen(user_name); *dpb++ = isc_dpb_user_name; *dpb++ = user_name_length; memcpy(dpb, user_name, user_name_length); dpb += user_name_length; const char* user_password = “masterkey”; const int user_password_length = strlen(user_password); *dpb++ = isc_dpb_password; *dpb++ = user_password_length; memcpy(dpb, user_password, user_password_length); dpb += user_password_length; // Force an immediate full database shutdown *dpb++ = isc_dpb_shutdown; *dpb++ = isc_dpb_shut_force | isc_dpb_shut_full; const int dpb_length = dpb - dpb_buffer; isc_attach_database(status_vector, 0, “employee.db”, &handle, dpb_length, dpb_buffer); if (status_vector[0] == 1 && status_vector[1]) { isc_print_status(status_vector); } else { isc_detach_database(status_vector, &handle); }

On-disk structure (ODS) changes include the following:

Maximum size of exception messages raised from 78 to 1021 bytes.

Added RDB$DESCRIPTION to RDB$GENERATORS, so now you can include description text when creating generators.

Added RDB$DESCRIPTION and RDB$SYSTEM_FLAG to RDB$ROLES to allow description text and to flag user-defined roles, respectively.

Introduced a concept of ODS type to distinguish between InterBase and Firebird databases.

The DSQL parser will now try to report the line and column number of an incomplete statement.

A new column RDB$STATISTICS has been added to the system table RDB$INDEX_SEGMENTS to store the per-segment selectivity values for multi-key indexes.

The column of the same name in RDB$INDICES is kept for compatibility and still represents the total index selectivity, that is used for a full index match.

Table of Contents

The following statement syntaxes and structures have been added to Firebird 2:

SEQUENCE has been introduced as a synonym for GENERATOR, in accordance with SQL-99. SEQUENCE is a syntax term described in the SQL specification, whereas GENERATOR is a legacy InterBase syntax term. Use of the standard SEQUENCE syntax in your applications is recommended.

A sequence generator is a mechanism for generating successive exact numeric values, one at a time. A sequence generator is a named schema object. In dialect 3 it is a BIGINT, in dialect 1 it is an INTEGER.

Syntax patterns

CREATE { SEQUENCE | GENERATOR } <name>

DROP { SEQUENCE | GENERATOR } <name>

SET GENERATOR <name> TO <start_value>

ALTER SEQUENCE <name> RESTART WITH <start_value>

GEN_ID (<name>, <increment_value>)

NEXT VALUE FOR <name>

Examples

1.

CREATE SEQUENCE S_EMPLOYEE;

2.

ALTER SEQUENCE S_EMPLOYEE RESTART WITH 0;

See also the notes about NEXT VALUE FOR.

ALTER SEQUENCE, like SET GENERATOR, is a good way to screw up the generation of key values!

SYSDBA, the database creator or the owner of an object can grant rights on that object to other users. However, those rights can be made inheritable, too. By using WITH GRANT OPTION, the grantor gives the grantee the right to become a grantor of the same rights in turn. This ability can be removed by the original grantor with REVOKE GRANT OPTION FROM user.

However, there's a second form that involves roles. Instead of specifying the same rights for many users (soon it becomes a maintenance nightmare) you can create a role, assign a package of rights to that role and then grant the role to one or more users. Any change to the role's rights affect all those users.

By using WITH ADMIN OPTION, the grantor (typically the role creator) gives the grantee the right to become a grantor of the same role in turn. Until FB v2, this ability couldn't be removed unless the original grantor fiddled with system tables directly. Now, the ability to grant the role can be removed by the original grantor with REVOKE ADMIN OPTION FROM user.

Domains allow their defaults to be changed or dropped. It seems natural that table fields can be manipulated the same way without going directly to the system tables.

Syntax Pattern

ALTER TABLE t ALTER [COLUMN] c SET DEFAULT default_value;

ALTER TABLE t ALTER [COLUMN] c DROP DEFAULT;

Array fields cannot have a default value.

If you change the type of a field, the default may remain in place. This is because a field can be given the type of a domain with a default but the field itself can override such domain. On the other hand, the field can be given a type directly in whose case the default belongs logically to the field (albeit the information is kept on an implicit domain created behind scenes).

The DDL statements RECREATE EXCEPTION and CREATE OR ALTER EXCEPTION (feature request SF #1167973) have been implemented, allowing either creating, recreating or altering an exception, depending on whether it already exists.

RECREATE EXCEPTION is exactly like CREATE EXCEPTION if the exception does not already exist. If it does exist, its definition will be completely replaced, if there are no dependencies on it.

ALTER EXTERNAL FUNCTION has been implemented, to enable the entry_point or the

module_name to be changed when the UDF declaration cannot be dropped due to existing

dependencies.

The COMMENT statement has been implemented for setting metadata descriptions.

Syntax Pattern

COMMENT ON DATABASE IS {'txt'|NULL};

COMMENT ON <basic_type> name IS {'txt'|NULL};

COMMENT ON COLUMN tblviewname.fieldname IS {'txt'|NULL};

COMMENT ON PARAMETER procname.parname IS {'txt'|NULL};

An empty literal string '' will act as NULL since the internal code (DYN in this case) works this way with blobs.

<basic_type>:

DOMAIN

TABLE

VIEW

PROCEDURE

TRIGGER

EXTERNAL FUNCTION

FILTER

EXCEPTION

GENERATOR

SEQUENCE

INDEX

ROLE

CHARACTER SET

COLLATION

SECURITY CLASS1

1not implemented, because this type is hidden.

FIRST/SKIP and ROWS syntaxes and PLAN and ORDER BY clauses can now be used in view specifications.

From Firebird 2.0 onward, views are treated as fully-featured SELECT expressions. Consequently, the clauses FIRST/SKIP, ROWS, UNION, ORDER BY and PLAN are now allowed in views and work as expected.

Syntax

For syntax details, refer to Select Statement & Expression Syntax in the chapter about DML.

The DDL statement RECREATE TRIGGER statement is now available in DDL. Semantics are the same as for other RECREATE statements.

The following changes will affect usage or existing, pre-Firebird 2 workarounds in existing applications or databases to some degree.

Now it is possible to create foreign key constraints without needing to get an exclusive lock on the whole database.

Apply NOT NULL constraints to base tables only, ignoring the ones inherited by view columns from domain definitions.

Previously, the only allowed syntax for declaring a blob filter was:

declare filter <name> input_type <number> output_type <number>

entry_point <function_in_library> module_name <library_name>;

The alternative new syntax is:

declare filter <name> input_type <mnemonic> output_type <mnemonic>

entry_point <function_in_library> module_name <library_name>;

where <mnemonic> refers to a subtype identifier known to the engine.

Initially they are binary, text and others mostly for internal usage, but an adventurous user could write a new mnemonic in rdb$types and use it, since it is parsed only at declaration time. The engine keeps the numerical value. Remember, only negative subtype values are meant to be defined by users.

To get the predefined types, do

select RDB$TYPE, RDB$TYPE_NAME, RDB$SYSTEM_FLAG

from rdb$types

where rdb$field_name = 'RDB$FIELD_SUB_TYPE';

RDB$TYPE RDB$TYPE_NAME RDB$SYSTEM_FLAG

========= ============================ =================

0 BINARY 1

1 TEXT 1

2 BLR 1

3 ACL 1

4 RANGES 1

5 SUMMARY 1

6 FORMAT 1

7 TRANSACTION_DESCRIPTION 1

8 EXTERNAL_FILE_DESCRIPTION 1

Examples

Original declaration:

declare filter pesh input_type 0 output_type 3

entry_point 'f' module_name 'p';

Alternative declaration:

declare filter pesh input_type binary output_type acl

entry_point 'f' module_name 'p';

Declaring a name for a user defined blob subtype (remember to commit after the insertion):

SQL> insert into rdb$types

CON> values('RDB$FIELD_SUB_TYPE', -100, 'XDR', 'test type', 0);

SQL> commit;

SQL> declare filter pesh2 input_type xdr output_type text

CON> entry_point 'p2' module_name 'p';

SQL> show filter pesh2;

BLOB Filter: PESH2

Input subtype: -100 Output subtype: 1

Filter library is p

Entry point is p2

Table of Contents

In this section are details of DML language statements or constructs that have been added to the DSQL language set in Firebird 2.0.

The SQL language extension EXECUTE BLOCK makes "dynamic PSQL" available to SELECT specifications. It has the effect of allowing a self-contained block of PSQL code to be executed in dynamic SQL as if it were a stored procedure.

Syntax pattern

EXECUTE BLOCK [ (param datatype = ?, param datatype = ?, ...) ]

[ RETURNS (param datatype, param datatype, ...) ]

AS

[DECLARE VARIABLE var datatype; ...]

BEGIN

...

END

For the client, the call isc_dsql_sql_info with the parameter

isc_info_sql_stmt_type returns

isc_info_sql_stmt_select if the block has output parameters.

The semantics of a call is similar to a SELECT query: the client has a cursor open, can fetch data from it,

and must close it after use.

isc_info_sql_stmt_exec_procedure if the block has no output parameters.

The semantics of a call is similar to an EXECUTE query: the client has no cursor and execution continues until

it reaches the end of the block or is terminated by a SUSPEND.

The client should preprocess only the head of the SQL statement or use '?' instead of ':' as the parameter indicator because, in the body of the block, there may be references to local variables or arguments with a colon prefixed.

Example

The user SQL is

EXECUTE BLOCK (X INTEGER = :X)

RETURNS (Y VARCHAR)

AS

DECLARE V INTEGER;

BEGIN

INSERT INTO T(...) VALUES (... :X ...);

SELECT ... FROM T INTO :Y;

SUSPEND;

END

The preprocessed SQL is

EXECUTE BLOCK (X INTEGER = ?)

RETURNS (Y VARCHAR)

AS

DECLARE V INTEGER;

BEGIN

INSERT INTO T(...) VALUES (... :X ...);

SELECT ... FROM T INTO :Y;

SUSPEND;

END

Implemented support for derived tables in DSQL (subqueries in FROM clause) as defined by SQL200X. A derived table is a set, derived from a dynamic SELECT statement. Derived tables can be nested, if required, to build complex queries and they can be involved in joins as though they were normal tables or views.

Syntax Pattern

SELECT

<select list>

FROM

<table reference list>

<table reference list> ::= <table reference> [{<comma> <table reference>}...]

<table reference> ::=

<table primary>

| <joined table>

<table primary> ::=

<table> [[AS] <correlation name>]

| <derived table>

<derived table> ::=

<query expression> [[AS] <correlation name>]

[<left paren> <derived column list> <right paren>]

<derived column list> ::= <column name> [{<comma> <column name>}...]

Examples

a) Simple derived table:

SELECT

*

FROM

(SELECT

RDB$RELATION_NAME, RDB$RELATION_ID

FROM

RDB$RELATIONS) AS R (RELATION_NAME, RELATION_ID)

b) Aggregate on a derived table which also contains an aggregate

SELECT

DT.FIELDS,

Count(*)

FROM

(SELECT

R.RDB$RELATION_NAME,

Count(*)

FROM

RDB$RELATIONS R

JOIN RDB$RELATION_FIELDS RF ON (RF.RDB$RELATION_NAME = R.RDB$RELATION_NAME)

GROUP BY

R.RDB$RELATION_NAME) AS DT (RELATION_NAME, FIELDS)

GROUP BY

DT.FIELDS

c) UNION and ORDER BY example:

SELECT

DT.*

FROM

(SELECT

R.RDB$RELATION_NAME,

R.RDB$RELATION_ID

FROM

RDB$RELATIONS R

UNION ALL

SELECT

R.RDB$OWNER_NAME,

R.RDB$RELATION_ID

FROM

RDB$RELATIONS R

ORDER BY

2) AS DT

WHERE

DT.RDB$RELATION_ID <= 4

Points to Note

Every column in the derived table must have a name. Unnamed expressions like constants should be added with an alias or the column list should be used.

The number of columns in the column list should be the same as the number of columns from the query expression.

The optimizer can handle a derived table very efficiently. However, if the derived table is involved in an inner join and contains a subquery, then no join order can be made.

The ROLLBACK RETAIN statement is now supported in DSQL.

A “rollback retaining” feature was introduced in InterBase 6.0, but this rollback mode

could be used only via an API call to isc_rollback_retaining(). By contrast,

“commit retaining” could be used either via an API call to

isc_commit_retaining() or by using a DSQL COMMIT RETAIN

statement.

Firebird 2.0 adds an optional RETAIN clause to the DSQL ROLLBACK

statement to make it consistent with COMMIT [RETAIN].

Syntax pattern: follows that of COMMIT RETAIN.

ROWS syntax is used to limit the number of rows retrieved from a select expression. For an uppermost-level select statement, it would specify the number of rows to be returned to the host program. A more understandable alternative to the FIRST/SKIP clauses, the ROWS syntax accords with the latest SQL standard and brings some extra benefits. It can be used in unions, any kind of subquery and in UPDATE or DELETE statements.

It is available in both DSQL and PSQL.

Syntax Pattern

SELECT ...

[ORDER BY <expr_list>]

ROWS <expr1> [TO <expr2>]

Examples

1.

SELECT * FROM T1

UNION ALL

SELECT * FROM T2

ORDER BY COL

ROWS 10 TO 100

2.

SELECT COL1, COL2,

( SELECT COL3 FROM T3 ORDER BY COL4 DESC ROWS 1 )

FROM T4

3.

DELETE FROM T5

ORDER BY COL5

ROWS 1

Points to Note

When <expr2> is omitted, then ROWS <expr1> is semantically equivalent to FIRST <expr1>. When both <expr1> and <expr2> are used, then ROWS <expr1> TO <expr2> means the same as FIRST (<expr2> - <expr1> + 1) SKIP (<expr1> - 1)

There is nothing that is semantically equivalent to a SKIP clause used without a FIRST clause.

The rules for UNION queries have been improved as follows:

UNION DISTINCT is now allowed as a synonym for simple UNION, in accordance with the SQL-99 specification. It is a minor change: DISTINCT is the default mode, according to the standard. Formerly, Firebird did not support the explicit inclusion of the optional keyword DISTINCT.

Syntax Pattern

UNION [{DISTINCT | ALL}]

Automatic type coercion logic between subsets of a union is now more intelligent. Resolution of the data type of the result of an aggregation over values of compatible data types, such as case expressions and columns at the same position in a union query expression, now uses smarter rules.

Syntax Rules

Let DTS be the set of data types over which we must determine the final result data type.

All of the data types in DTS shall be comparable.

Case:

If any of the data types in DTS is character string, then:

If any of the data types in DTS is variable-length character string, then the result data type is variable-length character string with maximum length in characters equal to the largest maximum amongst the data types in DTS.

Otherwise, the result data type is fixed-length character string with length in characters equal to the maximum of the lengths in characters of the data types in DTS.

The characterset/collation is used from the first character string data type in DTS.

If all of the data types in DTS are exact numeric, then the result data type is exact numeric with scale equal to the maximum of the scales of the data types in DTS and the maximum precision of all data types in DTS.

NOTE :: Checking for precision overflows is done at run-time only. The developer should take measures to avoid the aggregation resolving to a precision overflow.

If any data type in DTS is approximate numeric, then each data type in DTS shall be numeric else an error is thrown.

If some data type in DTS is a date/time data type, then every data type in DTS shall be a date/time data type having the same date/time type.

If any data type in DTS is BLOB, then each data type in DTS shall be BLOB and all with the same sub-type.

IIF (<search_condition>, <value1>, <value2>)

is implemented as a shortcut for

CASE

WHEN <search_condition> THEN <value1>

ELSE <value2>

END

It returns the value of the first sub-expression if the given search condition evaluates to TRUE, otherwise it returns a value of the second sub-expression.

Example

SELECT IIF(VAL > 0, VAL, -VAL) FROM OPERATION

The infamous “Datatype unknown” error (SF Bug #1371274) when attempting some castings has been eliminated. It is now possible to use CAST to advise the engine about the data type of a parameter.

Example

SELECT CAST(? AS INT) FROM RDB$DATABASE

The built-in function SUBSTRING() can now take arbitrary expressions in its parameters.

Formerly, the inbuilt SUBSTRING() function accepted only constants as its second and third arguments (start position and length, respectively). Now, the arguments can be anything that resolves to a value, including host parameters, function results, expressions, subqueries, etc.

The length of the resulting column is the same as the length of the first argument. This means that, in the following

x = varchar(50);

substring(x from 1 for 1);

the new column has a length of 50, not 1. (Thank the SQL standards committee!)

The following features involving NULL in DSQL have been implemented:

A new equivalence predicate behaves exactly like the equality/inequality predicates, but, instead of testing for equality, it tests whether one operand is distinct from the other.

Thus, IS NOT DISTINCT treats (NULL equals NULL) as if it were true, since one NULL (or expression resolving to NULL) is not distinct from another. It is available in both DSQL and PSQL.

Syntax Pattern

<value> IS [NOT] DISTINCT FROM <value>

Examples

1.

SELECT * FROM T1

JOIN T2

ON T1.NAME IS NOT DISTINCT FROM T2.NAME;

2.

SELECT * FROM T

WHERE T.MARK IS DISTINCT FROM 'test';

Points to note

Because the DISTINCT predicate considers that two NULL values are not distinct, it never evaluates to the truth value UNKNOWN. Like the IS [NOT] NULL predicate, it can only be True or False.

The NOT DISTINCT predicate can be optimized using an index, if one is available.

A NULL literal can now be treated as a value in all expressions without returning a syntax error. You may now specify expressions such as

A = NULL

B > NULL

A + NULL

B || NULL

All such expressions evaluate to NULL. The change does not alter nullability-aware semantics of the engine, it simply relaxes the syntax restrictions a little.

Placement of nulls in an ordered set has been changed to accord with the SQL standard that null ordering be consistent, i.e. if ASC[ENDING] order puts them at the bottom, then DESC[ENDING] puts them at the top; or vice-versa. This applies only to databases created under the new on-disk structure, since it needs to use the index changes in order to work.

If you override the default nulls placement, no index can be used for sorting. That is, no index will be used for an ASCENDING sort if NULLS LAST is specified, nor for a DESCENDING sort if NULLS FIRST is specified.

Examples

Database: proc.fdb

SQL> create table gnull(a int);

SQL> insert into gnull values(null);

SQL> insert into gnull values(1);

SQL> select a from gnull order by a;

A

============

<null>

1

SQL> select a from gnull order by a asc;

A

============

<null>

1

SQL> select a from gnull order by a desc;

A

============

1

<null>

SQL> select a from gnull order by a asc nulls first;

A

============

<null>

1

SQL> select a from gnull order by a asc nulls last;

A

============

1

<null>

SQL> select a from gnull order by a desc nulls last;

A

============

1

<null>

SQL> select a from gnull order by a desc nulls first;

A

============

<null>

1

CROSS JOIN is now supported. Logically, this syntax pattern:

A CROSS JOIN B

is equivalent to either of the following:

A INNER JOIN B ON 1 = 1

or, simply:

FROM A, B

(V.2.0.6) In the rare case where a cross join of three or more tables involved table[s] that contained no records, extremely slow performance was reported (CORE-2200). A performance improvement was gained by teaching the optimizer not to waste time and effort on walking through populated tables in an attempt to find matches in empty tables. (Backported from V.2.1.2)

SELECT specifications used in subqueries and in INSERT INTO <insert-specification> SELECT.. statements can now specify a UNION set.

ROWS specifications and PLAN and ORDER BY clauses can now be used in UPDATE and DELETE statements.

Users can now specify explicit plans for UPDATE/DELETE statements in order to optimize them manually. It is also possible to limit the number of affected rows with a ROWS clause, optionally used in combination with an ORDER BY clause to have a sorted recordset.

Syntax Pattern

UPDATE ... SET ... WHERE ...

[PLAN <plan items>]

[ORDER BY <value list>]

[ROWS <value> [TO <value>]]

or

DELETE ... FROM ...

[PLAN <plan items>]

[ORDER BY <value list>]

[ROWS <value> [TO <value>]]

A number of new facilities have been added to extend the context information that can be retrieved:

The context variable CURRENT_TIMESTAMP and the date/time literal 'NOW' will now return the sub-second time part in milliseconds.

CURRENT_TIME and CURRENT_TIMESTAMP now optionally allow seconds precision

The feature is available in both DSQL and PSQL.

Syntax Pattern

CURRENT_TIME [(<seconds precision>)]

CURRENT_TIMESTAMP [(<seconds precision>)]

Examples

1. SELECT CURRENT_TIME FROM RDB$DATABASE;

2. SELECT CURRENT_TIME(3) FROM RDB$DATABASE;

3. SELECT CURRENT_TIMESTAMP(3) FROM RDB$DATABASE;

The maximum possible precision is 3 which means accuracy of 1/1000 second (one millisecond). This accuracy may be improved in the future versions.

If no seconds precision is specified, the following values are implicit:

0 for CURRENT_TIME

3 for CURRENT_TIMESTAMP

Values of context variables can now be obtained using the system functions RDB$GET_CONTEXT and RDB$SET_CONTEXT. These new built-in functions give access through SQL to some information about the current connection and current transaction. They also provide a mechanism to retrieve user context data and associate it with the transaction or connection.

Syntax Pattern

RDB$SET_CONTEXT( <namespace>, <variable>, <value> )

RDB$GET_CONTEXT( <namespace>, <variable> )

These functions are really a form of external function that exists inside the database intead of being called from a dynamically loaded library. The following declarations are made automatically by the engine at database creation time:

Declaration

DECLARE EXTERNAL FUNCTION RDB$GET_CONTEXT

VARCHAR(80),

VARCHAR(80)

RETURNS VARCHAR(255) FREE_IT;

DECLARE EXTERNAL FUNCTION RDB$SET_CONTEXT

VARCHAR(80),

VARCHAR(80),

VARCHAR(255)

RETURNS INTEGER BY VALUE;

Usage

RDB$SET_CONTEXT and RDB$GET_CONTEXT set and retrieve the current value of a context variable. Groups of context variables with similar properties are identified by Namespace identifiers. The namespace determines the usage rules, such as whether the variables may be read and written to, and by whom.

Namespace and variable names are case-sensitive.

RDB$GET_CONTEXT retrieves current value of a variable. If the variable does not exist in namespace, the function returns NULL.

RDB$SET_CONTEXT sets a value for specific variable, if it is writable. The function returns a value of 1 if the variable existed before the call and 0 otherwise.

To delete a variable from a context, set its value to NULL.

A fixed number of pre-defined namespaces is available:

Offers access to session-specific user-defined variables. You can define and set values for variables with any name in this context.

Provides read-only access to the following variables:

NETWORK_PROTOCOL :: The network protocol used by client to connect. Currently used values: “TCPv4”, “WNET”, “XNET” and NULL.

CLIENT_ADDRESS :: The wire protocol address of the remote client, represented as a string. The value is an IP address in form "xxx.xxx.xxx.xxx" for TCPv4 protocol; the local process ID for XNET protocol; and NULL for any other protocol.

DB_NAME :: Canonical name of the current database. It is either the alias name (if connection via file names is disallowed DatabaseAccess = NONE) or, otherwise, the fully expanded database file name.

ISOLATION_LEVEL :: The isolation level of the current transaction. The returned value will be one of "READ COMMITTED", "SNAPSHOT", "CONSISTENCY".

TRANSACTION_ID :: The numeric ID of the current transaction. The returned value is the same as would be returned by the CURRENT_TRANSACTION pseudo-variable.

SESSION_ID :: The numeric ID of the current session. The returned value is the same as would be returned by the CURRENT_CONNECTION pseudo-variable.

CURRENT_USER :: The current user. The returned value is the same as would be returned by the CURRENT_USER pseudo-variable or the predefined variable USER.

CURRENT_ROLE :: Current role for the connection. Returns the same value as the CURRENT_ROLE pseudo-variable.

To avoid DoS attacks against the Firebird Server, the number of variables stored for each transaction or session context is limited to 1000.

Example of Use

set term ^;

create procedure set_context(User_ID varchar(40), Trn_ID integer) as

begin

RDB$SET_CONTEXT('USER_TRANSACTION', 'Trn_ID', Trn_ID);

RDB$SET_CONTEXT('USER_TRANSACTION', 'User_ID', User_ID);

end ^

create table journal (

jrn_id integer not null primary key,

jrn_lastuser varchar(40),

jrn_lastaddr varchar(255),

jrn_lasttransaction integer

)^

CREATE TRIGGER UI_JOURNAL FOR JOURNAL BEFORE INSERT OR UPDATE

as

begin

new.jrn_lastuser = rdb$get_context('USER_TRANSACTION', 'User_ID');

new.jrn_lastaddr = rdb$get_context('SYSTEM', 'CLIENT_ADDRESS');

new.jrn_lasttransaction = rdb$get_context('USER_TRANSACTION', 'Trn_ID');

end ^

commit ^

execute procedure set_context('skidder', 1) ^

insert into journal(jrn_id) values(0) ^

set term ;^

Since rdb$set_context returns 1 or zero, it can be made to work with a simple SELECT statement.

Example

SQL> select rdb$set_context('USER_SESSION', 'Nickolay', 'ru')

CNT> from rdb$database;

RDB$SET_CONTEXT

===============

0

0 means not defined already; we have set it to 'ru'

SQL> select rdb$set_context('USER_SESSION', 'Nickolay', 'ca')

CNT> from rdb$database;

RDB$SET_CONTEXT

===============

1

1 means it was defined already; we have changed it to 'ca'

SQL> select rdb$set_context('USER_SESSION', 'Nickolay', NULL)

CNT> from rdb$database;

RDB$SET_CONTEXT

===============

1

1 says it existed before; we have changed it to NULL, i.e. undefined it.

SQL> select rdb$set_context('USER_SESSION', 'Nickolay', NULL)

CNT> from rdb$database;

RDB$SET_CONTEXT

===============

0

0, since nothing actually happened this time: it was already undefined .

Plan fragments are propagated to nested levels of joins, enabling manual optimization of complex outer joins

A user-supplied plan will be checked for correctness in outer joins

Short-circuit optimization for user-supplied plans has been added

A user-specified access path can be supplied for any SELECT-based statement or clause

Syntax rules

The following schema describing the syntax rules should be helpful when composing plans.

PLAN ( { <stream_retrieval> | <sorted_streams> | <joined_streams> } )

<stream_retrieval> ::= { <natural_scan> | <indexed_retrieval> |

<navigational_scan> }

<natural_scan> ::= <stream_alias> NATURAL

<indexed_retrieval> ::= <stream_alias> INDEX ( <index_name>

[, <index_name> ...] )

<navigational_scan> ::= <stream_alias> ORDER <index_name>

[ INDEX ( <index_name> [, <index_name> ...] ) ]

<sorted_streams> ::= SORT ( <stream_retrieval> )

<joined_streams> ::= JOIN ( <stream_retrieval>, <stream_retrieval>

[, <stream_retrieval> ...] )

| [SORT] MERGE ( <sorted_streams>, <sorted_streams> )

Details

Natural scan means that all rows are fetched in their natural storage order. Thus, all pages must be read before search criteria are validated.

Indexed retrieval uses an index range scan to find row ids that match the given search criteria. The found matches are combined in a sparse bitmap which is sorted by page numbers, so every data page will be read only once. After that the table pages are read and required rows are fetched from them.

Navigational scan uses an index to return rows in the given order, if such an operation is appropriate.-

The index b-tree is walked from the leftmost node to the rightmost one.

If any search criterion is used on a column specified in an ORDER BY clause, the navigation is limited to some subtree path, depending on a predicate.

If any search criterion is used on other columns which are indexed, then a range index scan is performed in advance and every fetched key has its row id validated against the resulting bitmap. Then a data page is read and the required row is fetched.

Note that a navigational scan incurs random page I/O, as reads are not optimized.

A sort operation performs an external sort of the given stream retrieval.

A join can be performed either via the nested loops algorithm (JOIN plan) or via the sort merge algorithm (MERGE plan).-

An inner nested loop join may contain as many streams as are required to be joined. All of them are equivalent.

An outer nested loops join always operates with two streams, so you'll see nested JOIN clauses in the case of 3 or more outer streams joined.

A sort merge operates with two input streams which are sorted beforehand, then merged in a single run.

Examples

SELECT RDB$RELATION_NAME

FROM RDB$RELATIONS

WHERE RDB$RELATION_NAME LIKE 'RDB$%'

PLAN (RDB$RELATIONS NATURAL)

ORDER BY RDB$RELATION_NAME

SELECT R.RDB$RELATION_NAME, RF.RDB$FIELD_NAME

FROM RDB$RELATIONS R

JOIN RDB$RELATION_FIELDS RF

ON R.RDB$RELATION_NAME = RF.RDB$RELATION_NAME

PLAN MERGE (SORT (R NATURAL), SORT (RF NATURAL))

Notes

A PLAN clause may be used in all select expressions, including subqueries, derived tables and view definitions. It can be also used in UPDATE and DELETE statements, because they're implicitly based on select expressions.

If a PLAN clause contains some invalid retrieval description, then either an error will be returned or this bad clause will be silently ignored, depending on severity of the issue.

ORDER <navigational_index> INDEX ( <filter_indices> ) kind of plan is reported by the engine and can be used in the user-supplied plans starting with FB 2.0.

Some useful improvements have been made to SQL sorting operations:

Column aliases are now allowed in both these clauses.

Examples:

ORDER BY

SELECT RDB$RELATION_ID AS ID

FROM RDB$RELATIONS

ORDER BY ID

GROUP BY

SELECT RDB$RELATION_NAME AS ID, COUNT(*)

FROM RDB$RELATION_FIELDS

GROUP BY ID

A GROUP BY condition can now be any valid expression.

Example

...

GROUP BY

SUBSTRING(CAST((A * B) / 2 AS VARCHAR(15)) FROM 1 FOR 2)

Order by degree (ordinal column position) now works on a select * list.

Example

SELECT *

FROM RDB$RELATIONS

ORDER BY 9

According to grammar rules, since v.1.5, ORDER BY <value_expression> is allowed and <value_expression> could be a variable or a parameter. It is tempting to assume that ORDER BY <degree_number> could thus be validly represented as a replaceable input parameter, or an expression containing a parameter.

However, while the DSQL parser does not reject the parameterised ORDER BY clause expression if it resolves to an integer, the optimizer requires an absolute, constant value in order to identify the position in the output list of the ordering column or derived field. If a parameter is accepted by the parser, the output will undergo a “dummy sort” and the returned set will be unsorted.

Added SQL-99 compliant NEXT VALUE FOR <sequence_name> expression as a synonym for GEN_ID(<generator-name>, 1), complementing the introduction of CREATE SEQUENCE syntax as the SQL standard equivalent of CREATE GENERATOR.

Examples

1.

SELECT GEN_ID(S_EMPLOYEE, 1) FROM RDB$DATABASE;

2.

INSERT INTO EMPLOYEE (ID, NAME)

VALUES (NEXT VALUE FOR S_EMPLOYEE, 'John Smith');

Currently, increment ("step") values not equal to 1 (one) can be used only by calling the GEN_ID function. Future versions are expected to provide full support for SQL-99 sequence generators, which allows the required increment values to be specified at the DDL level. Unless there is a vital need to use a step value that is not 1, use of a NEXT VALUE FOR value expression instead of the GEN_ID function is recommended.

GEN_ID(<name>, 0) allows you to retrieve the current sequence value, but it should never be used in insert/update statements, as it produces a high risk of uniqueness violations in a concurrent environment.

The RETURNING clause syntax has been implemented for the INSERT statement, enabling the return of a result set from the INSERT statement. The set contains the column values actually stored. Most common usage would be for retrieving the value of the primary key generated inside a BEFORE-trigger.

Available in DSQL and PSQL.

Syntax Pattern

INSERT INTO ... VALUES (...) [RETURNING <column_list> [INTO <variable_list>]]

Example(s)

1.

INSERT INTO T1 (F1, F2)

VALUES (:F1, :F2)

RETURNING F1, F2 INTO :V1, :V2;

2.

INSERT INTO T2 (F1, F2)

VALUES (1, 2)

RETURNING ID INTO :PK;

The INTO part (i.e. the variable list) is allowed in PSQL only (to assign local variables) and rejected in DSQL.

In DSQL, values are being returned within the same protocol roundtrip as the INSERT itself is executed.

If the RETURNING clause is present, then the statement is described as isc_info_sql_stmt_exec_procedure by the API (instead of isc_info_sql_stmt_insert), so the existing connectivity drivers should support this feature automagically.

Any explicit record change (update or delete) performed by AFTER-triggers is ignored by the RETURNING clause.

Cursor based inserts (INSERT INTO ... SELECT ... RETURNING ...) are not supported.

This clause can return table column values or arbitrary expressions.

Alias handling and ambiguous field detecting have been improved. In summary:

When a table alias is provided for a table, either that alias, or no alias, must be used. It is no longer valid to supply only the table name.

Ambiguity checking now checks first for ambiguity at the current level of scope, making it valid in some conditions for columns to be used without qualifiers at a higher scope level.

Examples

When an alias is present it must be used; or no alias at all is allowed.

This query was allowed in FB1.5 and earlier versions:

SELECT

RDB$RELATIONS.RDB$RELATION_NAME

FROM

RDB$RELATIONS R

but will now correctly report an error that the field "RDB$RELATIONS.RDB$RELATION_NAME" could not be found.

Use this (preferred):

SELECT

R.RDB$RELATION_NAME

FROM

RDB$RELATIONS R

or this statement:

SELECT

RDB$RELATION_NAME

FROM

RDB$RELATIONS R

The statement below will now correctly use the FieldID from the subquery and from the updating table:

UPDATE

TableA

SET

FieldA = (SELECT SUM(A.FieldB) FROM TableA A

WHERE A.FieldID = TableA.FieldID)

In Firebird it is possible to provide an alias in an update statement, but many other database vendors do not support it. These SQL statements will improve the interchangeability of Firebird's SQL with other SQL database products.

This example did not run correctly in Firebird 1.5 and earlier:

SELECT

RDB$RELATIONS.RDB$RELATION_NAME,

R2.RDB$RELATION_NAME

FROM

RDB$RELATIONS

JOIN RDB$RELATIONS R2 ON

(R2.RDB$RELATION_NAME = RDB$RELATIONS.RDB$RELATION_NAME)

If RDB$RELATIONS contained 90 records, it would return 90 * 90 = 8100 records, but in Firebird 2 it will correctly return 90 records.

This failed in Firebird 1.5, but is possible in Firebird 2:

SELECT

(SELECT RDB$RELATION_NAME FROM RDB$DATABASE)

FROM

RDB$RELATIONS

Ambiguity checking in subqueries: the query below would run in Firebird 1.5 without reporting an ambiguity, but will report it in Firebird 2:

SELECT

(SELECT

FIRST 1 RDB$RELATION_NAME

FROM

RDB$RELATIONS R1

JOIN RDB$RELATIONS R2 ON

(R2.RDB$RELATION_NAME = R1.RDB$RELATION_NAME))

FROM

RDB$DATABASE

About the semantics

A select statement is used to return data to the caller (PSQL module or the client program)

Select expressions retrieve parts of data that construct columns that can be in either the final result set or in any of the intermediate sets. Select expressions are also known as subqueries.

Syntax rules

<select statement> ::=

<select expression> [FOR UPDATE] [WITH LOCK]

<select expression> ::=

<query specification> [UNION [{ALL | DISTINCT}] <query specification>]

<query specification> ::=

SELECT [FIRST <value>] [SKIP <value>] <select list>

FROM <table expression list>

WHERE <search condition>

GROUP BY <group value list>

HAVING <group condition>

PLAN <plan item list>

ORDER BY <sort value list>

ROWS <value> [TO <value>]

<table expression> ::=

<table name> | <joined table> | <derived table>

<joined table> ::=

{<cross join> | <qualified join>}

<cross join> ::=

<table expression> CROSS JOIN <table expression>

<qualified join> ::=

<table expression> [{INNER | {LEFT | RIGHT | FULL} [OUTER]}] JOIN <table expression>

ON <join condition>

<derived table> ::=

'(' <select expression> ')'

Conclusions

FOR UPDATE mode and row locking can only be performed for a final dataset, they cannot be applied to a subquery

Unions are allowed inside any subquery

Clauses FIRST, SKIP, PLAN, ORDER BY, ROWS are allowed for any subquery

Notes

Either FIRST/SKIP or ROWS is allowed, but a syntax error is thrown if you try to mix the syntaxes

An INSERT statement accepts a select expression to define a set to be inserted into a table. Its SELECT part supports all the features defined for select statments/expressions

UPDATE and DELETE statements are always based on an implicit cursor iterating through its target table and limited with the WHERE clause. You may also specify the final parts of the select expression syntax to limit the number of affected rows or optimize the statement.

Clauses allowed at the end of UPDATE/DELETE statements are PLAN, ORDER BY and ROWS.

Table of Contents

The following keywords have been added, or have changed status, since Firebird 1.5. Those marked with an asterisk (*) are not present in the SQL standard.

BIT_LENGTH

BOTH

CHAR_LENGTH

CHARACTER_LENGTH

CLOSE

CROSS

FETCH

LEADING

LOWER

OCTET_LENGTH

OPEN

ROWS

TRAILING

TRIM

BACKUP *

BLOCK *

COLLATION

COMMENT *

DIFFERENCE *

IIF *

NEXT

SCALAR_ARRAY *

SEQUENCE

RESTART

RETURNING *

Table of Contents

The following enhancements have been made to the PSQL language extensions for stored procedures and triggers:

ROW_COUNT has been enhanced so that it can now return the number of rows returned by a SELECT statement.

For example, it can be used to check whether a singleton SELECT INTO statement has performed an assignment:

..

BEGIN

SELECT COL FROM TAB INTO :VAR;

IF (ROW_COUNT = 0) THEN

EXCEPTION NO_DATA_FOUND;

END

..

See also its usage in the examples below for explicit PSQL cursors.

It is now possible to declare and use multiple cursors in PSQL. Explicit cursors are available in a DSQL EXECUTE BLOCK structure as well as in stored procedures and triggers.

Syntax pattern

DECLARE [VARIABLE] <cursor_name> CURSOR FOR ( <select_statement> );

OPEN <cursor_name>;

FETCH <cursor_name> INTO <var_name> [, <var_name> ...];

CLOSE <cursor_name>;

Examples

1.

DECLARE RNAME CHAR(31);

DECLARE C CURSOR FOR ( SELECT RDB$RELATION_NAME

FROM RDB$RELATIONS );

BEGIN

OPEN C;

WHILE (1 = 1) DO

BEGIN

FETCH C INTO :RNAME;

IF (ROW_COUNT = 0) THEN

LEAVE;

SUSPEND;

END

CLOSE C;

END

2.

DECLARE RNAME CHAR(31);

DECLARE FNAME CHAR(31);

DECLARE C CURSOR FOR ( SELECT RDB$FIELD_NAME

FROM RDB$RELATION_FIELDS

WHERE RDB$RELATION_NAME = :RNAME

ORDER BY RDB$FIELD_POSITION );

BEGIN

FOR

SELECT RDB$RELATION_NAME

FROM RDB$RELATIONS

INTO :RNAME

DO

BEGIN

OPEN C;

FETCH C INTO :FNAME;

CLOSE C;

SUSPEND;

END

END

Cursor declaration is allowed only in the declaration section of a PSQL block/procedure/trigger, as with any regular local variable declaration.

Cursor names are required to be unique in the given context. They must not conflict with the name of another cursor that is "announced", via the AS CURSOR clause, by a FOR SELECT cursor. However, a cursor can share its name with any other type of variable within the same context, since the operations available to each are different.

Positioned updates and deletes with cursors using the WHERE CURRENT OF clause are allowed.

Attempts to fetch from or close a FOR SELECT cursor are prohibited.

Attempts to open a cursor that is already open, or to fetch from or close a cursor that is already closed, will fail.

All cursors which were not explicitly closed will be closed automatically on exit from the current PSQL block/procedure/trigger.

The ROW_COUNT system variable can be used after each FETCH statement to check whether any row was returned.

Defaults can now be declared for stored procedure arguments.

The syntax is the same as a default value definition for a column or domain, except that you can use '=' in place of 'DEFAULT' keyword.

Arguments with default values must be last in the argument list; that is, you cannot declare an argument that has no default value after any arguments that have been declared with default values. The caller must supply the values for all of the arguments preceding any that are to use their defaults.

For example, it is illegal to do something like this: supply arg1, arg2, miss arg3,

set arg4...

Substitution of default values occurs at run-time. If you define a procedure with defaults (say P1), call it from another procedure (say P2) and skip some final, defaulted arguments, then the default values for P1 will be substituted by the engine at time execution P1 starts. This means that, if you change the default values for P1, it is not necessary to recompile P2.

However, it is still necessary to disconnect all client connections, as discussed in the Borland InterBase 6 beta "Data Definition Guide" (DataDef.pdf), in the section "Altering and dropping procedures in use".

Examples

CONNECT ... ;

SET TERM ^;

CREATE PROCEDURE P1 (X INTEGER = 123)

RETURNS (Y INTEGER)

AS

BEGIN

Y = X;

SUSPEND;

END ^

COMMIT ^

SET TERM ;^

SELECT * FROM P1;

Y

============

123

EXECUTE PROCEDURE P1;

Y

============

123

SET TERM ^;

CREATE PROCEDURE P2

RETURNS (Y INTEGER)

AS

BEGIN

FOR SELECT Y FROM P1 INTO :Y

DO SUSPEND;

END ^

COMMIT ^

SET TERM ;^

SELECT * FROM P2;

Y